7 Proven Components of Successful Cloud Governance

This article discusses the seven key components of successful cloud governance that businesses must implement to minimize risks and ensure a smooth transition to the[...]

Business Lessons from Rackspace Massive Server Outage

In the early morning of December 2, 2022, the Rackspace email system comprising hosted Exchange Servers went down. Users started reporting issues accessing services or[...]

On-Site IT and Its Considerable Advantages to Business

Business goals achieved through digital initiatives and technologies have transformed modern enterprises. Strategies focused on reimagining processes, products, and services create a competitive advantage. Blueprints[...]

Dangers of Piecemealing IT Solutions

A piecemeal approach to anything is characterized by small, haphazard measures of uncertainty over a longer period of time. Aside from the extended time to[...]

Mitigating Potential Threats with Sound Security Protocols

As cybersecurity becomes increasingly complex, many organizations lack the resources or knowledge they need to create an effective security strategy. That’s why you need[...]

Everything You Need To Know About Network Assessments

Some businesses may think that once their network is set up, they no longer need to invest any time or resources in it, but that[...]

Benefits of Virtual Desktop Deployment

Implementing virtualized desktops across your enterprise environment can provide users with a high-definition desktop experience while helping to improve security and reduce costs. While the[...]

Four Ways Cloud Can Help Transform Your Business

For organizations seeking greater efficiency and agility, the cloud offers an increasingly appealing option. This is especially the case for smaller businesses, which are often[...]

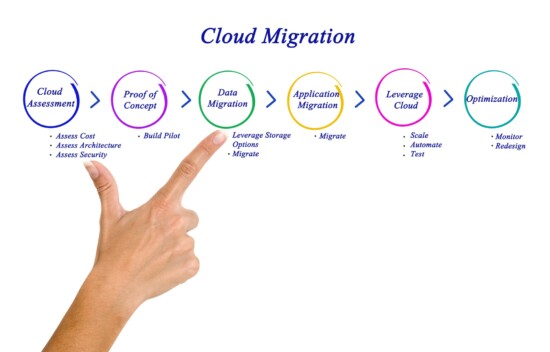

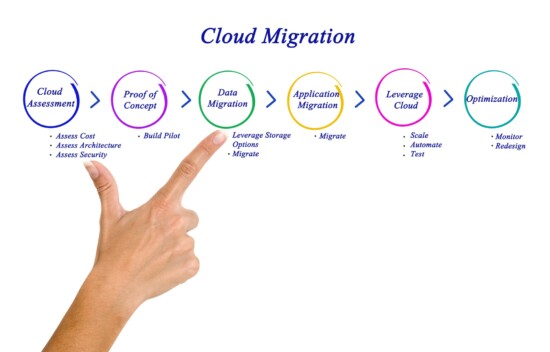

5 Key Questions to Ask Before a Cloud Migration

As businesses strive to keep pace with the demands of the digital age, many are capitalizing on the efficiency and scalability advantages of cloud computing.[...]